Europe’s AI Act Moves Forward: What’s Happened, What’s Next, and How to Prepare for Compliance

Introduction

In December 2022, the Council of the European Union (Council) adopted its common position (Common Position) on the Artificial Intelligence Act (AI Act).1 The Council’s action marks a milestone on the EU’s path to comprehensive regulation of artificial intelligence (AI) systems, building on the restrictions on automated decision-making in the General Data Protection Regulation.2 The AI Act categorizes systems by risk and imposes highly prescriptive requirements on high-risk systems. In addition, the legislation has a broadly extraterritorial scope: it will govern both AI systems operating in the EU and foreign systems whose output enters the EU market. Companies all along the AI value chain need to pay close attention to the AI Act, regardless of their geographic location.

The European Commission (Commission) proposed the AI Act (EC Proposal) in April 2021.3 Since then, the EU’s co-legislators, the European Parliament (Parliament) and the Council, have separately considered potential revisions. The Council’s deliberations culminated in the adoption of its Common Position, that is, its version of the legislation. Parliament hopes to arrive at its position by the end of March 2023.

After the Parliament adopts its position, the Council and Parliament will negotiate over the final text of the legislation along with the Commission, a process called the “trilogue.” In these negotiations, the parties will have to resolve some key disputes, including how to supervise law enforcement use of AI systems and, relatedly, how much latitude (if any) governments will have to use real-time and ex-post biometric recognition systems for law enforcement and national security. After the trilogue results in an agreed-upon text, that version will go before the Parliament and Council for final approvals.

In the meantime, there is enough clarity on the EU’s direction that businesses should begin considering whether their internal AI systems or AI-enabled products and services will be covered by the AI Act and, if so, what steps they will need to take for compliance.

The Council’s Major Changes

The Common Position contains several important changes from the EC Proposal.

Narrower Scope

The EC Proposal defined AI systems as: “software that is developed with one or more of the techniques and approaches listed in Annex I and can, for a given set of human-defined objectives, generate outputs such as content, predictions, recommendations, or decisions influencing the environments they interact with.”4 Critics have argued this definition is overly broad and sweeps in statistical and other processes used by businesses that are not generally considered to be AI.

In response, the Council revised the definition of an AI system:

a system that is designed to operate with elements of autonomy and that, based on machine and/or human-provided data and inputs, infers how to achieve a given set of objectives using machine learning and/or logic- and knowledge[-]based approaches, and produces system-generated outputs such as content (generative AI systems), predictions, recommendations or decisions, influencing the environments with which the AI system interacts.5

The term “software” has been removed from the definition entirely, and the concept of autonomous operation has been added. In addition, the Council excluded “Statistical approaches, Bayesian estimation, search[,] and optimization methods,” limiting AI systems to those employing either machine learning or logic- and knowledge-based approaches.

The Council reinforced the importance of autonomy to the definition in a few ways. First, the Common Position no longer categorizes an AI system as “software” and expressly excludes any system that uses “rules defined solely by natural persons to automatically execute operations.”6 Second, unlike the EC Proposal, the Common Position introduces the concept of machine learning as one of several “approaches” an AI system may use to “achieve a given set of objectives . . . and produce[] system-generated outputs” such as content and decisions, and emphasizes that machine learning does not include “explicitly programm[ing a system] with a set of step-by-step instructions from input to output.”7 Third, the Common Position clarifies that “[l]ogic- and knowledge[-]based approaches . . . typically involve a knowledge base [usually encoded by human experts] and an inference engine [acting on and extracting information from the knowledge base] that generates outputs by reasoning on the knowledge base.”8

Beyond the definitions, the Common Position narrows the scope of the AI Act by excluding the use of AI systems and their outputs for the sole purpose of scientific research and development, for any R&D activity regarding AI systems, and for non-professional purposes.9 As discussed below, the Council also introduced various exemptions for national security and law enforcement.

Expansion of Prohibited Practices

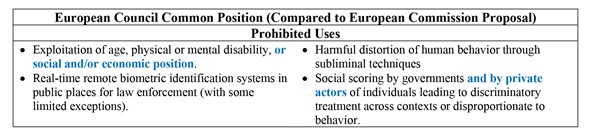

The EC Proposal of April 2021 proscribed four categories of AI uses: distortion of human behavior through subliminal techniques, behavior manipulation exploiting age or disability, real-time remote biometric identification by law enforcement, and governmental social scoring, defined as the evaluation or classification of the trustworthiness of a person based on their social behavior in multiple contexts or known or predicted personal or personality characteristics.

The Common Position makes two revisions to these prohibitions: it now bans behavior manipulation exploiting not only age or disability, but also a person’s social and/or economic situation, and now prohibits social scoring not only by governmental entities, but also by private actors.10 (In several charts below, we summarize selected aspects of the AI Act, showing the Council’s additions in bold blue text and deletions in struck-through bold red text.)

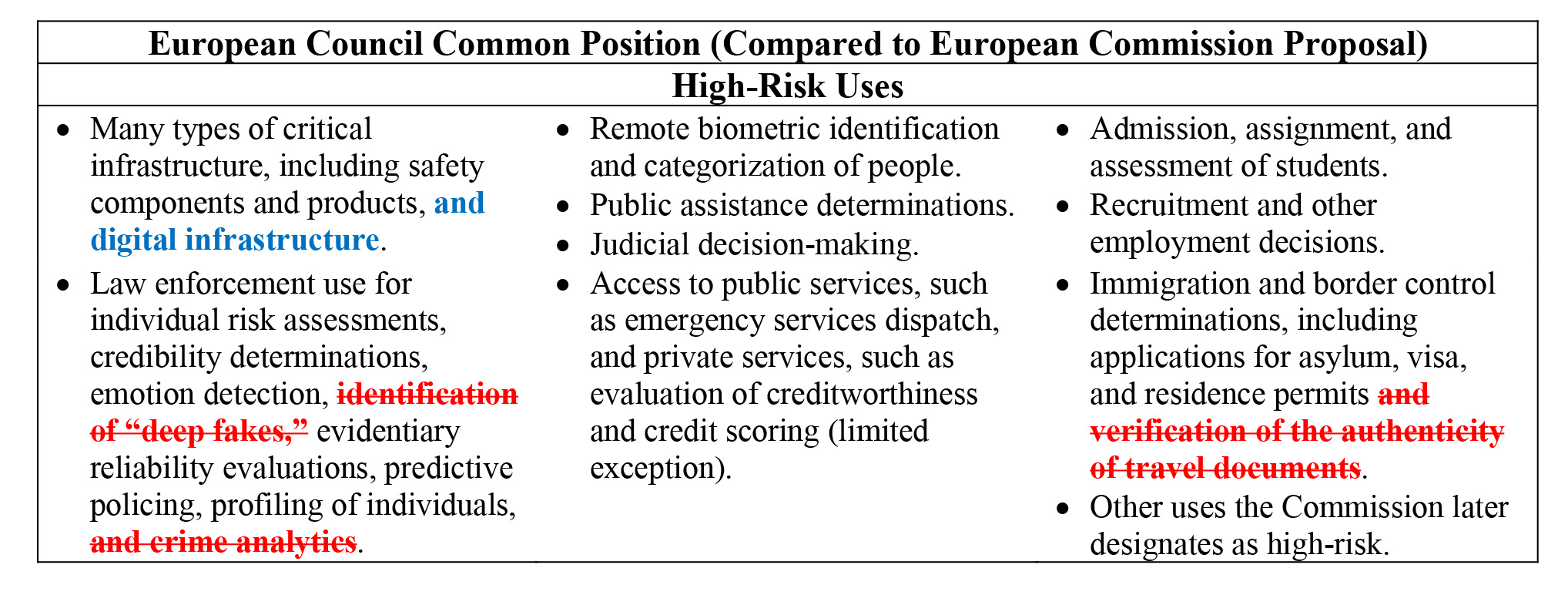

High-Risk AI Use Cases

The EC Proposal listed eight broad categories of high-risk uses while empowering the Commission to add to the list. While the Common Position retains the eight categories, it makes other significant revisions, including adding “digital infrastructure” to its definition of critical infrastructure, adding life and health insurance as new high-risk uses, and removing deep fake detection by law enforcement, crime analytics, and verification of the authenticity of travel documents.11 Significantly, the Council would allow the Commission not only to add high-risk use cases, but also to delete high-risk use cases under certain conditions.12

Of course, the risk posed by an AI system depends upon how its output contributes to an ultimate action or decision. To prevent overdesignation of systems as high-risk, the Common Position requires consideration of whether the output of an AI system is “purely accessory in respect of the relevant action or decision to be taken and is not therefore likely to lead to a significant risk to the health, safety[,] or fundamental rights.”13 If so, the system is not high-risk.
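The triage described above can be sketched in a few lines of Python. This is an illustrative simplification for internal screening, not a statement of the legal test: the class, field, and function names are hypothetical, each yes/no input stands in for a legal judgment that counsel would need to make, and the final text of the AI Act may set different or additional criteria.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "prohibited"
    HIGH_RISK = "high-risk"
    NOT_HIGH_RISK = "not high-risk"


@dataclass
class SystemProfile:
    """Hypothetical screening record; each flag reflects a legal judgment."""
    name: str
    uses_prohibited_practice: bool      # e.g., social scoring
    in_listed_high_risk_category: bool  # falls within a listed high-risk use
    output_purely_accessory: bool       # output is purely accessory to the decision


def triage(profile: SystemProfile) -> RiskTier:
    """Apply the simplified ordering: prohibition first, then the high-risk
    list, then the 'purely accessory' carve-out."""
    if profile.uses_prohibited_practice:
        return RiskTier.PROHIBITED
    if profile.in_listed_high_risk_category and not profile.output_purely_accessory:
        return RiskTier.HIGH_RISK
    return RiskTier.NOT_HIGH_RISK


# Example: a listed use whose output is purely accessory is screened out.
formatter = SystemProfile("document formatter", False, True, True)
print(formatter.name, "->", triage(formatter).value)
```

A screening tool like this is useful mainly for flagging systems that need closer legal review; it cannot replace that review.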

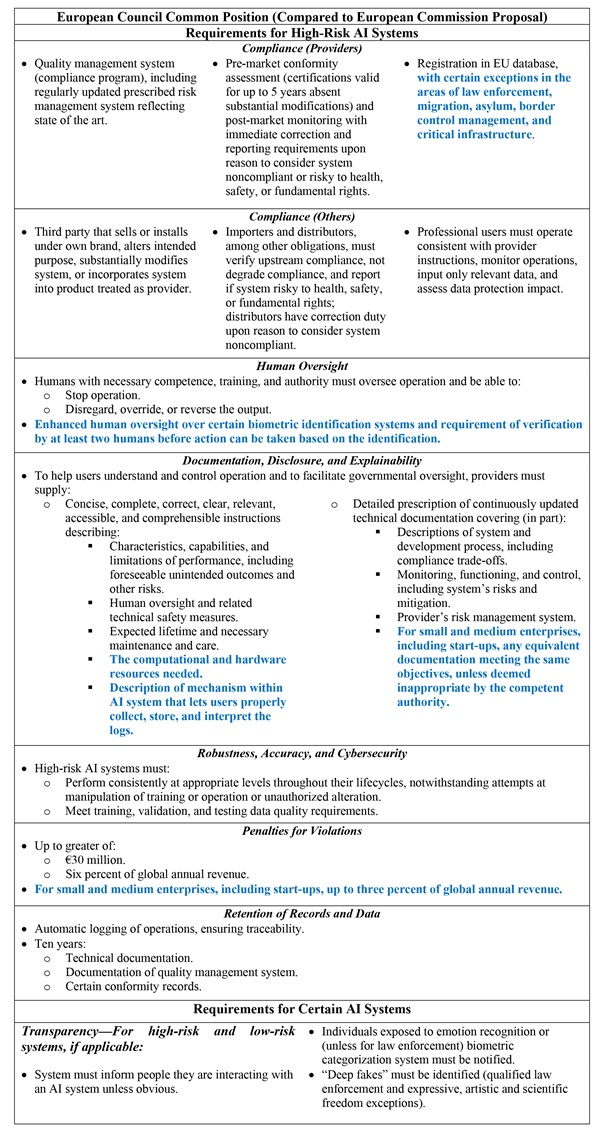

Requirements for High-Risk AI Systems

Once an AI system is classified as high-risk, the AI Act subjects it to numerous detailed requirements. The Common Position makes certain obligations clearer, more technically feasible, or less burdensome than they were under the EC Proposal.14 For example, it limits the risks subject to the risk management system requirements to “only those which may be reasonably mitigated or eliminated through the development or design of the high-risk AI system, or the provision of adequate technical information.”15 In addition, the Common Position would not require risk management systems to address risks under conditions of reasonably foreseeable misuse.16

New Plan to Address General Purpose AI Systems

The EC Proposal did not contemplate “general purpose AI”—AI systems that can be adapted for a variety of use cases, including cases that are high-risk. This omission has triggered much debate over how to classify general purpose AI and where in the value chain to place compliance obligations.

The Council addressed these concerns in a few ways. First, the Common Position introduces a definition of general purpose AI: “[A]n AI system that . . . is intended by the provider to perform generally applicable functions, such as image and speech recognition, . . . [and] in a plurality of contexts.”17

Second, the Common Position delegates to the Commission responsibility for determining how to apply the requirements for high-risk AI systems to general purpose AI systems that are used as high-risk AI systems by themselves or as parts of other high-risk AI systems. The Commission would do so by adopting “implementing acts.”18

Third, providers of such general purpose AI systems would have to comply with many, but not all, of the obligations of providers of high-risk AI systems. For instance, they would not need a quality management system.19 However, they would have to “cooperate with and provide the necessary information to other providers” incorporating their systems into high-risk AI systems or components thereof to enable compliance by the latter providers.20 General purpose AI system providers can exempt themselves from these obligations altogether if, having considered the risks of misuse in good faith, they explicitly exclude all high-risk uses in the instructions or other documentation accompanying their systems.21

These provisions do not apply to providers of general purpose AI systems that qualify as micro, small, or medium-sized enterprises.22

Additional Carveouts for National Security and Law Enforcement

Much debate has centered on the degree to which the AI Act should apply to national security and law enforcement uses of AI systems. The governments of EU member states have sought to protect their flexibility, especially in exigent circumstances, and the Common Position reflects this objective. For instance, the Council expressly excluded use of AI systems for national security, defense, and military purposes from the scope of the legislation.23 The Common Position also exempts sensitive law enforcement data from collection, documentation, and analysis under the post-market monitoring system for high-risk AI systems.24 And the Common Position somewhat expands permissible law enforcement uses of real-time remote biometric identification systems.25

These changes set up what is expected to be perhaps the most difficult issue for resolution in the trilogue. The Parliament has been moving in the other direction—towards banning real-time remote biometric identification systems altogether26—and there has been speculation the Council added some exemptions for law enforcement as bargaining chips for the upcoming negotiations.27

Regulatory Sandboxes and Other Support for Innovation

The Common Position expands the regulatory support for innovation. It provides greater guidance for establishment of “regulatory sandboxes” that will allow innovative AI systems to be developed, trained, tested, and validated under supervision by regulatory authorities before commercial marketing or deployment. In addition, the Common Position allows for testing systems under real-world conditions both inside and outside the supervised regulatory sandboxes—the latter with various protections to prevent harms, including informed consent by participants and provisions for effective reversal or blocking of predictions, recommendations, or decisions by the tested system.28

The Council also exempted qualifying microenterprise providers of high-risk AI systems from the requirements for quality management systems.29

Emerging Global AI Regulation

Final passage of the AI Act (quite possibly later this year) will bring a massive increase in regulatory obligations for companies that develop, sell, procure, or use AI systems in connection with the EU. And other jurisdictions are stepping up their regulation of AI and other automated decision-making.

In the United States, federal, state, and local governments have all focused on the risks AI poses. The leading bipartisan congressional privacy bill would regulate algorithmic decision-making.30 The Federal Trade Commission is considering how to formulate rules on automated decision-making (as well as privacy and data security)31 while other federal agencies are cracking down on algorithmic discrimination in employment,32 healthcare,33 housing,34 and lending.35 Meanwhile, California, Colorado, Connecticut, Virginia, and New York City have laws on automated decision-making that took effect on January 1 or will become effective this year.36

The Cyberspace Administration of China has adopted provisions governing algorithms that make decisions or create content, while Shanghai and Shenzhen have enacted laws as well.37 The United Kingdom is working on its AI-governance strategy,38 the Canadian government has introduced the Artificial Intelligence and Data Act within broader legislation,39 and Brazil is developing its own AI regulatory legislation.40 Meanwhile, the EU, UK, Brazil, China, South Africa, and other countries already regulate automated decision-making systems under their privacy laws. Indeed, the Dutch Data Protection Authority recently announced plans to begin supervising AI and other algorithmic decision-making for transparency, discrimination, and arbitrariness under the GDPR and other laws.41

A Practical Approach to Global Compliance

The AI Act’s final contours remain uncertain, as does the exact shape of AI regulation in other jurisdictions. Yet, we have enough clarity for companies to ready their global compliance programs.

A company can begin by taking stock of the systems it develops, distributes, or uses to make automated decisions affecting individuals or safe operations. Then, assess the risks each one poses. For help in spotting risks, adapt a checklist such as The Assessment List for Trustworthy AI42 to the particulars of the business and system. No single person or team may have a full understanding of how the system was created, trained, and tested, so probe assumptions and dependencies on work performed by others. Take care to vet each component of the system, including components purchased from vendors, because they may introduce risks, too.
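One way to start the stock-taking described above is a simple structured inventory. The sketch below is a minimal illustration under stated assumptions: the record fields, the `role` values, and the helper names are hypothetical choices, not terms drawn from the AI Act or from any particular checklist.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Component:
    name: str
    vendor: Optional[str] = None  # None means built in-house
    vetted: bool = False          # has someone reviewed this component's risks?


@dataclass
class AISystemRecord:
    name: str
    role: str                      # hypothetical values: "develop", "distribute", "use"
    affects_individuals: bool
    affects_safe_operations: bool
    components: List[Component] = field(default_factory=list)


def in_scope(inventory: List[AISystemRecord]) -> List[AISystemRecord]:
    """Systems whose automated decisions affect individuals or safe operations."""
    return [s for s in inventory if s.affects_individuals or s.affects_safe_operations]


def unvetted_components(inventory: List[AISystemRecord]) -> List[Tuple[str, str]]:
    """(system, component) pairs still awaiting review, vendor-supplied or not."""
    return [(s.name, c.name) for s in inventory for c in s.components if not c.vetted]


inventory = [
    AISystemRecord(
        name="resume screener",
        role="use",
        affects_individuals=True,
        affects_safe_operations=False,
        components=[
            Component("ranking model", vendor="ExampleVendor", vetted=False),
            Component("intake form parser", vetted=True),
        ],
    ),
]
print(unvetted_components(inventory))
```

Recording who built each component makes it easier to see where the company is relying on assumptions about work performed by others.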

Next, the business should take reasonable steps to mitigate the identified risks. There are many aspects to mitigating risks from automated decisions.

Explainability is a good starting point. An explanation makes it easier to appeal an adverse outcome that does not make sense, or to accept the result if it does. Moreover, having a wide range of explanations for a system eases oversight and gives the company’s leaders assurance the system is accurate and satisfies regulatory obligations and business objectives. Explaining Decisions Made with AI by the UK Information Commissioner’s Office and The Alan Turing Institute43 provides practical advice on explainability.

After explainability, focus on bias. The Algorithmic Bias Playbook44 is a useful guide for bias audits, which require social-scientific understanding and value judgments, not just good engineering. “[T]here is no simple metric to measure fairness that a software engineer can apply. . . . Fairness is a human, not a mathematical, determination.”45 When an audit reveals disparate impacts against protected classes, the company’s lawyers will have to determine whether the distinctions may be justified by bona fide business reasons under the applicable laws. Even if so, leadership should contemplate whether the justification reflects the company’s values.
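As a starting point for the quantitative part of a bias audit, the sketch below compares favorable-outcome rates across groups. It is a hedged illustration only: the function names and the sample data are hypothetical, the ratio is one common screening statistic rather than a legal standard, and, consistent with the point quoted above, no single number can settle whether a system is fair.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(outcomes: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of favorable outcomes per group, from (group, favorable) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    favorable: Dict[str, int] = defaultdict(int)
    for group, is_favorable in outcomes:
        totals[group] += 1
        if is_favorable:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}


def impact_ratios(rates: Dict[str, float]) -> Dict[str, float]:
    """Each group's rate divided by the highest group's rate (1.0 = parity)."""
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group received a favorable outcome")
    return {group: rate / best for group, rate in rates.items()}


# Made-up sample: group A approved 5 of 10 times, group B 3 of 10 times.
sample = [("A", True)] * 5 + [("A", False)] * 5 + [("B", True)] * 3 + [("B", False)] * 7
rates = selection_rates(sample)
print(impact_ratios(rates))
```

A low ratio for a group is a prompt for the legal and values questions discussed above, not an answer to them.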

In addition to explainability and bias, a global AI compliance program should address documentation. For high-risk AI systems, the AI Act will mandate extensive documentation and recordkeeping about the system’s development, risk management and mitigation, and operations. Other AI laws, both adopted and proposed, have similar requirements. Even where not legally required, a business may choose to document how its AI and other automated decision-making systems were developed, trained, tested, and used. Records of reasonable risk mitigation can help defend against government investigations or private litigation over a system’s mistaken decisions. (In September 2022, the Commission proposed a directive on civil liability for AI systems; once adopted, it will be easier for Europeans harmed by AI systems to obtain compensation.46) Of course, greater document retention has downsides, so companies need to strike the right balance if going beyond legal requirements.

For a comprehensive approach to managing AI risks, consult the Artificial Intelligence Risk Management Framework (AI RMF) recently released by the US National Institute of Standards and Technology (NIST).47 Accompanying the AI RMF is NIST’s AI RMF Playbook (which remains in draft).48 The AI RMF Playbook provides a recommended program for governing, mapping, measuring, and managing AI risks. While prepared by a US agency, the AI RMF and AI RMF Playbook are intended to “[b]e law- and regulation-agnostic.”49 They should support a global enterprise’s compliance with laws and regulations across jurisdictions.

Conclusion

The Council’s Common Position brings the AI Act one big step closer to adoption. Parliament’s passage of its version and then the trilogue negotiations remain ahead this year. Still, the final legislation is likely to include highly prescriptive regulation of high-risk AI systems. Companies should do what they can now to protect against the regulatory and litigation risks that are beginning to materialize—if they want to stay ahead of the curve.

© Arnold & Porter Kaye Scholer LLP 2023 All Rights Reserved. This Advisory is intended to be a general summary of the law and does not constitute legal advice. You should consult with counsel to determine applicable legal requirements in a specific fact situation.

1. Council Common Position, 2021/0106 (COD), Proposal for a Regulation of the European Parliament and of the Council Laying Down Harmonised Rules on Artificial Intelligence (Artificial Intelligence Act) and Amending Certain Union Legislative Acts - General Approach (Common Position).

2. General Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 on the Protection of Natural Persons with Regard to the Processing of Personal Data and on the Free Movement of Such Data, and Repealing Directive 95/46/EC (General Data Protection Regulation), OJ 2016 L 119/1.

3. Commission Proposal for a Regulation of the European Parliament and of the Council Laying Down Harmonised Rules on Artificial Intelligence (Artificial Intelligence Act) and Amending Certain Union Legislative Acts, COM (2021) 206 final (Apr. 21, 2021) (EC Proposal).

4. Annex I lists three categories: “(a) Machine learning approaches, including supervised, unsupervised[,] and reinforcement learning, using a wide variety of methods including deep learning; (b) Logic- and knowledge-based approaches, including knowledge representation, inductive (logic) programming, knowledge bases, inference and deductive engines, (symbolic) reasoning[,] and expert systems; (c) Statistical approaches, Bayesian estimation, search[,] and optimization methods.”

6. Compare id. art. 3(1), Recital (6) with EC Proposal art. 3(1).

7. Compare Common Position art. 3(1), Recital (6a) with EC Proposal art. 3(1).

8. Compare Common Position art. 3(1), Recital (6b) with EC Proposal art. 3(1).

9. Compare Common Position art. 2 with EC Proposal art. 2.

10. Compare Common Position art. 5(1)(b) with EC Proposal art. 5(1)(b).

11. Compare Common Position Annex III(5)(d) and Annex III(6) with EC Proposal Annex III(5) and Annex III(6).

12. Compare Common Position art. 7(3) with EC Proposal art. 7.

14. For an example of how the requirements relating to quality of data have become clearer and more technically feasible, compare Common Position art. 10(2)(b), (f), 10(6) with EC Proposal art. 10(2)(b), (f), 10(6). For an example of how the requirements relating to technical documentation are more technically feasible, compare Common Position arts. 4b(4), 11(1) with EC Proposal art. 11(1); see also Common Position arts. 13, 14, 23a.

15. Compare Common Position art. 9(2) with EC Proposal art. 9(2).

16. Compare Common Position art. 9(2)(b), 9(4) with EC Proposal art. 9(2)(b), 9(4).

25. Compare id. art. 5(1)(d) with EC Proposal art. 5(1)(d).

26. See, e.g., Luca Bertuzzi, AI Act: EU Parliament’s discussions heat up over facial recognition, scope, Euractiv (Oct. 6, 2022); Luca Bertuzzi, AI regulation filled with thousands of amendments in the European Parliament, Euractiv (Jun. 2, 2022).

27. See Luca Bertuzzi, EU countries adopt a common position on Artificial Intelligence rulebook, Euractiv (Dec. 6, 2022).

30. See American Data Privacy and Protection Act, H.R. 8152, § 207 (117th Cong.).

31. See Peter J. Schildkraut et al., Major Changes Ahead for the Digital Economy? What Companies Should Know About FTC’s Privacy, Data Security and Algorithm Rulemaking Proceeding, Arnold & Porter (Aug. 30, 2022).

32. See Alexis Sabet et al., EEOC’s Draft Enforcement Plan Prioritizes Technology-Related Employment Discrimination, Arnold & Porter: Enforcement Edge (Jan. 20, 2023); Allon Kedem et al., Avoiding ADA Violations When Using AI Employment Technology, Bloomberg Law (June 6, 2022).

33. See Allison W. Shuren et al., HHS Proposes Rules Prohibiting Discriminatory Health Care-Related Activities, Arnold & Porter (Aug. 18, 2022).

34. See United States v. Meta Platforms, Inc., No. 1:22-cv-05187-JGK (S.D.N.Y June 27, 2022).

35. See Richard Alexander et al., CFPB Updates UDAAP Exam Manual to Target Discrimination, Arnold & Porter (Mar. 28, 2022).

36. See Peter J. Schildkraut and Jami Vibbert, Preparing Your Regulatory Compliance Program for 2023, Law360 (Oct. 4, 2022).

37. See Peter J. Schildkraut et al., Have Your Websites and Online Services Become Unlawful in China?, Corp. Counsel (Mar. 31, 2022).

38. See Jacqueline Mulryne and Peter J. Schildkraut, UK Proposes New Pro-Innovation Framework for Regulating AI, Arnold & Porter: Enforcement Edge (July 26, 2022); James Castro-Edwards and Peter J. Schildkraut, UK Regulators Seek Architectural Advice as They Lay the Foundation for Governing Algorithms, Arnold & Porter (May 20, 2022).

39. See Peter J. Schildkraut et al., Global AI Regulation: Canadian Edition, Arnold & Porter (Aug. 29, 2022).

40. See Agência Senado, Comissão do marco regulatório da inteligência artificial estende prazo para sugestões [Artificial Intelligence Regulatory Framework Commission Extends Deadline for Suggestions], Senado Federal (Nov. 5, 2022).

41. See Contouren algoritmetoezicht AP naar Tweede Kamer [Dutch Data Protection Authority to Send Outline of AI Regulation to the House of Representatives], Autoriteit Persoonsgegevens (Dec. 22, 2022).

42. Assessment List for Trustworthy Artificial Intelligence (ALTAI) for Self-Assessment, European Comm’n (Jul. 17, 2020).

43. UK Info. Comm’r’s Office & The Alan Turing Inst., Explaining Decisions Made with AI (May 20, 2020).

44. Ziad Obermeyer et al., Algorithmic Bias Playbook, Chicago Booth Ctr. for Applied Artificial Intelligence (June 2021).

45. Nicol Turner Lee et al., Algorithmic Bias Detection and Mitigation: Best Practices and Policies to Reduce Consumer Harms, Brookings (May 22, 2019).

46. See Commission Proposal for a Directive of the European Parliament and of the Council on Adapting Non-Contractual Civil Liability Rules to Artificial Intelligence, COM(2022) 496 final (Sept. 28, 2022).

47. US Nat’l Inst. of Standards & Tech., Artificial Intelligence Risk Management Framework (AI RMF 1.0) (Jan. 2023).

48. US Nat’l Inst. of Standards & Tech., NIST AI Risk Management Framework Playbook.